Writing a VFS plugin for network-packet access

Although Genode already provides a socket API that is backed by two different Virtual File System (VFS) plugins, we have no mechanism yet for sending/receiving raw Ethernet frames via the libc. On Linux and FreeBSD, the kernel provides virtual TAP devices as an interface for this purpose. We can easily emulate this interface by implementing a new VFS plugin. In this article, I will share my experiences with this particular plugin and provide some insights into the internals of the VFS.

When porting software from the Unix world to Genode, we try to keep modifications of the 3rd-party code to a minimum. An essential part of this consists in providing the required libraries (e.g., libc, stdcxx). But, even with all libraries in place, we also need to bridge the gap between the Unix viewpoint of "everything is a file (descriptor)" and the Genode world of session interfaces. This is where the VFS comes into play: Genode’s C runtime (libc) maps file operations to the component’s VFS. Let’s have a look at a common example:

<config>

<libc stdout="/dev/log"/>

<vfs>

<dir name="dev"> <log/> </dir>

</vfs>

</config>

This component config tells the libc to use /dev/log for stdout and use the built-in log plugin of the VFS to "connect" /dev/log to a LOG session. You can find a good overview on how to configure the VFS and what plugins are available in Martin’s article series.

In this article, I concentrate on how to write a new plugin for the VFS by implementing a plugin for raw network access. Before we jump into the code, I will sketch how TAP devices are used on FreeBSD/Linux and how this maps to the VFS architecture.

Edit 2025-05-21: A regularly updated version of this article is available in the Genode Applications book on genode.org.

TAP-device foundations

Genode’s C runtime is based on a port of FreeBSD’s libc. We therefore have a look at how TAP devices are used on FreeBSD before glancing at Linux.

On FreeBSD, we simply open an existing TAP device (e.g. /dev/tap0) and are able to write/read to the acquired file descriptor afterwards. In addition, there are a few I/O control operations (ioctl), by which we can get/set the MAC address or get the device name for instance. Let’s look at an example:

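#include <sys/types.h>

#include <sys/socket.h>

#include <sys/ioctl.h>

#include <fcntl.h>

#include <stdio.h>

#include <string.h>

#include <unistd.h>

#include <net/if.h>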

#include <net/if_tap.h>

/* [...] */

int fd0 = open("/dev/tap0", O_RDWR);

if (fd0 == -1) {

printf("Error: open(/dev/tap0) failed\n");

return 1;

}

int fd1 = open("/dev/tap1", O_RDWR);

if (fd1 == -1) {

printf("Error: open(/dev/tap1) failed\n");

close(fd0);

return 1;

}

char mac[6];

memset(mac, 0, sizeof(mac));

if (ioctl(fd0, SIOCGIFADDR, (void *)mac) < 0) {

printf("Error: Could not get MAC address of /dev/tap0.\n");

close(fd0);

close(fd1);

return 1;

}

enum { BUFFLEN = 1500 };

char buffer[BUFFLEN];

while (1) {

ssize_t received = read(fd0, buffer, BUFFLEN);

if (received < 0) {

close(fd0);

close(fd1);

return 1;

}

ssize_t written = write(fd1, buffer, received);

if (written < received) {

close(fd0);

close(fd1);

return 1;

}

}

We have just created an application that forwards Ethernet frames from tap0 to tap1. For demonstration purposes, I added an ioctl call for getting the MAC address of tap0. A detailed description of TAP devices in FreeBSD is given in the corresponding man page.

In Linux, the interface is a bit different. You first open /dev/net/tun and create the device by a TUNSETIFF ioctl. This process can be conducted several times to create multiple devices. A detailed description can be found in the Linux kernel documentation.

Actually, you can also open the generic /dev/tap in FreeBSD, which will return a file descriptor to the first available device. If only a single TAP device is used, we can therefore get away with mapping our emulated TAP device to /dev/tap or /dev/net/tun. For starters, let’s focus on this simpler use case for our VFS plugin.

Architecture

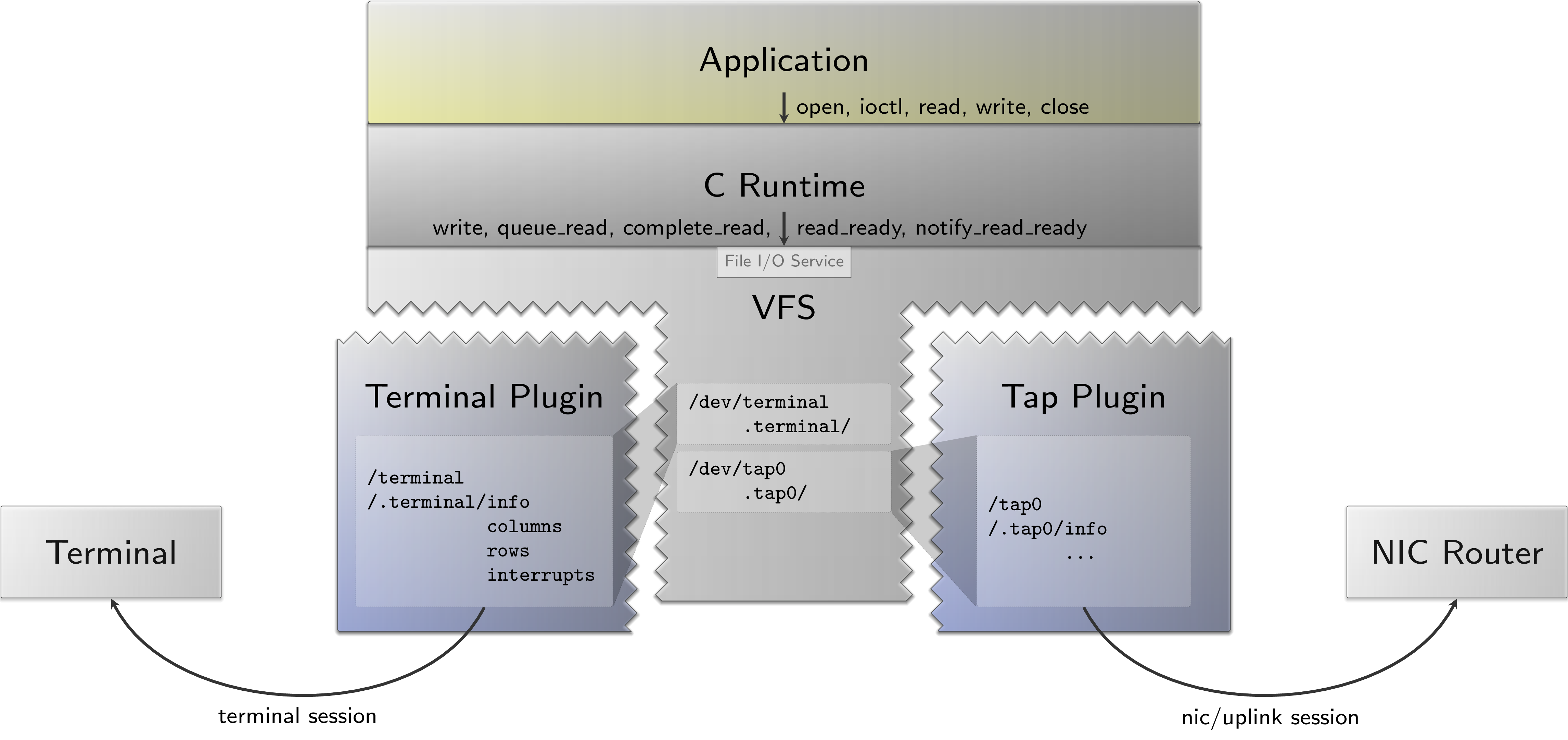

Before we dive into the VFS, let me draw a high-level picture of how Genode’s C runtime maps file operations to the VFS.


The figure above illustrates the plugin structure of the VFS. A plugin provides one or multiple files (e.g. /dev/terminal) that are incorporated into the directory tree of the VFS. The application is then able to perform the standard file operations on these files. The VFS plugin typically translates these operations into operations on a particular session interface (e.g., terminal session). The C runtime also emulates ioctl by mapping these requests to read/write accesses of pseudo files (e.g. /dev/.terminal/…), see the release notes 20.11 or the corresponding commit message. By convention, an info file (e.g. /dev/.terminal/info) contains an XML report with a single XML node named after the plugin type. The node may contain any number of attributes to specify parameters needed by the C runtime to implement the particular ioctl, e.g.:

<terminal rows="25" columns="80"/>

In case parameters shall be modifiable, the info file can be accompanied by a separate (writeable) file for each modifiable parameter.
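To illustrate how a runtime could answer an ioctl from such an info node, here is a minimal stand-alone sketch in plain C++. It is not the libc's implementation and deliberately avoids Genode's Xml_node. The helpers attribute_value and window_size as well as the Window_size type are hypothetical names introduced for this example, and the attribute lookup is a naive string search rather than a real XML parser.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

/*
 * Naive attribute lookup for a single self-closing XML node such as
 * <terminal rows="25" columns="80"/>. Illustration only, no substitute
 * for a real XML parser.
 */
inline std::string attribute_value(std::string const &node,
                                   std::string const &name,
                                   std::string const &fallback)
{
	assert(!name.empty());

	std::string const key   = " " + name + "=\"";
	std::size_t const start = node.find(key);
	if (start == std::string::npos)
		return fallback;

	std::size_t const begin = start + key.size();
	std::size_t const end   = node.find('"', begin);
	if (end == std::string::npos)
		return fallback;

	return node.substr(begin, end - begin);
}

/* hypothetical result of a window-size query */
struct Window_size { unsigned rows, columns; };

/* derive the window size from the content of the info file */
inline Window_size window_size(std::string const &info)
{
	return { static_cast<unsigned>(std::stoul(attribute_value(info, "rows",    "0"))),
	         static_cast<unsigned>(std::stoul(attribute_value(info, "columns", "0"))) };
}
```

Calling window_size() with the node shown above yields 25 rows and 80 columns. A missing attribute falls back to 0 in this sketch.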

Internally, the C runtime uses the non-blocking Vfs::File_io_service interface to perform read/write accesses on the VFS. The write() operation returns an error if writing cannot be performed immediately. Reads are split into queue_read() and complete_read() methods. In order to avoid futile polling, the latter are accompanied by a read_ready() method, which returns true if there is readable data, and a notify_read_ready() method by which one is able to announce interest in receiving read-ready signals.

At the same time, the C runtime takes care of emulating the blocking semantics of read/write operations that we have seen in our example in the beginning. I will come back to this down below.
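The interplay of a non-blocking read interface and blocking semantics on top of it can be sketched in a few lines of plain C++. The following toy model does not use the real Vfs::File_io_service. Toy_file, blocking_read and the wait_for_io callback are hypothetical names, and the callback merely stands in for whatever mechanism the runtime uses to suspend until I/O progress is signalled.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <deque>
#include <functional>
#include <string>

/* hypothetical result type, loosely modelled after the VFS read results */
enum class Read_result { OK, QUEUED };

/* toy stand-in for a non-blocking file */
struct Toy_file
{
	std::deque<std::string> packets { };   /* data waiting to be read */

	bool read_ready() const { return !packets.empty(); }

	/* never blocks: either delivers one packet or reports QUEUED */
	Read_result complete_read(char *dst, std::size_t count, std::size_t &out)
	{
		assert(dst != nullptr);

		if (!read_ready())
			return Read_result::QUEUED;

		std::string const &packet = packets.front();
		out = packet.size() < count ? packet.size() : count;
		std::memcpy(dst, packet.data(), out);
		packets.pop_front();
		return Read_result::OK;
	}
};

/*
 * Emulate a blocking read: retry complete_read() and, whenever it
 * reports QUEUED, wait until something signals I/O progress.
 */
inline std::size_t blocking_read(Toy_file &file, char *dst, std::size_t count,
                                 std::function<void()> const &wait_for_io)
{
	std::size_t out = 0;
	while (file.complete_read(dst, count, out) == Read_result::QUEUED)
		wait_for_io();
	return out;
}
```

In a test, wait_for_io can simply push a packet into the queue, which makes blocking_read() return on the next iteration.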

Usage preview

Before we start coding, let’s envision how we want to use the plugin:

<config>

<vfs>

<dir name="dev">

<tap name="tap0" label="tap0" mode="uplink_client"

mac="11:22:33:44:55:66" />

<tap name="tap1" label="tap1" />

</dir>

</vfs>

</config>

In the above example, we mount the plugin twice to create /dev/tap0 and /dev/tap1. The name attribute of the <tap> node is mandatory. The plugin shall either use a NIC session or an uplink session to transmit the Ethernet frames to a NIC router. This is configured by the optional mode attribute. In case the plugin acts as an uplink client, we can specify the default MAC address to be used. Otherwise, the MAC address is assigned by the NIC router. The label attribute can be used to distinguish the session requests at the NIC router.

Please note that I am going to omit most attributes when walking through the plugin implementation to keep the presentation rather concise.

Writing the vfs_tap plugin

Alright, now to the fun part. Let’s start with preparing the stage for our plugin by creating a subdirectory. Since I do not expect any dependencies other than base and os, I create it in the os repository.

genode/repos/> mkdir -p os/src/lib/vfs/tap

The path suggests that the plugin is supposed to be built as a library. Note that the type of the XML node is used by the VFS to determine the name of the plugin library to probe. More precisely, when adding a <tap> node to the config, the VFS tries to load a vfs_tap.lib.so. Hence, the build-description file goes into os/lib/mk/vfs_tap.mk with the following content:

SRC_CC := vfs_tap.cc

vpath %.cc $(REP_DIR)/src/lib/vfs/tap

SHARED_LIB := yes

Since libraries are only compiled implicitly, i.e., when required as a dependency, we can add a dummy target.mk file to os/src/lib/vfs/tap, which will enable us to call make lib/vfs/tap:

TARGET = dummy-vfs_tap

LIBS = vfs_tap

Now, let’s add the first few lines to vfs_tap.cc:

namespace Vfs {

struct Tap_file_system;

}

struct Vfs::Tap_file_system

{

using Name = String<64>;

struct Compound_file_system;

struct Local_factory;

struct Data_file_system;

};

/* [...] */

extern "C" Vfs::File_system_factory *vfs_file_system_factory(void)

{

struct Factory : Vfs::File_system_factory

{

Vfs::File_system *create(Vfs::Env &env, Genode::Xml_node config) override

{

return new (env.alloc())

Vfs::Tap_file_system::Compound_file_system(env, config);

}

};

static Factory f;

return &f;

}

From the forward declarations, we can already see that the Tap_file_system is composed of three parts: a Compound_file_system, a Local_factory and a Data_file_system. This is a scheme that we commonly apply when writing VFS plugins. We will walk through each of those step by step. Note that we could also make Tap_file_system a namespace rather than a struct. The subtle difference is that a namespace can be reopened and extended elsewhere, whereas the struct emphasises the inextensibility.

The plugin’s entrypoint is the vfs_file_system_factory function, which returns a File_system_factory by which the VFS is able to create a File_system from the corresponding XML node (e.g. <tap name="tap0"/>). We return a Compound_file_system, which serves as a top-level file system and is able to instantiate arbitrary sub-directories and files on its own by using VFS primitives. Let’s have a closer look:

class Vfs::Tap_file_system::Compound_file_system : private Local_factory,

public Vfs::Dir_file_system

{

private:

typedef Tap_file_system::Name Name;

typedef String<200> Config;

static Config _config(Name const &name)

{

char buf[Config::capacity()] { };

Genode::Xml_generator xml(buf, sizeof(buf), "compound", [&] () {

xml.node("data", [&] () {

xml.attribute("name", name); });

xml.node("dir", [&] () {

xml.attribute("name", Name(".", name));

xml.node("info", [&] () {});

});

});

return Config(Genode::Cstring(buf));

}

public:

Compound_file_system(Vfs::Env &vfs_env, Genode::Xml_node node)

:

Local_factory(vfs_env, node),

Vfs::Dir_file_system(vfs_env,

Xml_node(_config(Local_factory::name(node)).string()),

*this)

{ }

static const char *name() { return "tap"; }

char const *type() override { return name(); }

};

The Compound_file_system is a Dir_file_system and a Local_factory. The former allows us to create a nested directory structure from XML as we are used to when writing a component’s <vfs> config. In this case, the static _config() method generates the following XML:

<compound>

<data name="foobar"/>

<dir name=".foobar">

<info/>

</dir>

</compound>

The type of the root node has no particular meaning. Yet, since it is not "dir", it instructs the Dir_file_system to allow multiple sibling nodes to be present at the mount point. In our case, these are a data file system and a subdirectory containing an info file system. The latter has a static name, whereas the subdirectory and data file system are named after what the implementation of Local_factory returns. Already knowing how the C runtime interacts with the VFS, we can identify that the data file system shall provide read/write access to our virtual TAP device whereas the subdirectory is used for ioctl support. The info file system follows the aforementioned convention and provides a file containing a <tap> XML node with a name attribute.
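To make the resulting layout more tangible, the following stand-alone sketch reproduces the generated configuration with plain string concatenation and derives the path of the info file. It is only an illustration: the plugin itself uses Genode::Xml_generator, and the helper names compound_config and info_path are made up for this example.

```cpp
#include <cassert>
#include <string>

/*
 * Build the compound configuration for a device of the given name.
 * The output is a single line without whitespace between the nodes.
 */
inline std::string compound_config(std::string const &name)
{
	assert(!name.empty());

	return "<compound>"
	       "<data name=\"" + name + "\"/>"
	       "<dir name=\"." + name + "\">"
	       "<info/>"
	       "</dir>"
	       "</compound>";
}

/* path of the info file for a device mounted in 'mount_dir' */
inline std::string info_path(std::string const &mount_dir,
                             std::string const &name)
{
	return mount_dir + "/." + name + "/info";
}
```

For a device named tap0 that is mounted in /dev, the data file appears as /dev/tap0 and info_path("/dev", "tap0") evaluates to /dev/.tap0/info.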

Note, the type() method is part of the File_system interface and must return the XML node type to which our plugin responds.

Next, we must implement the Local_factory. As the name suggests, it is responsible for instantiating the file systems that we used in our Compound_file_system, i.e. the data and info file system:

struct Vfs::Tap_file_system::Local_factory : File_system_factory

{

Vfs::Env &_env;

Name const _name;

Data_file_system _data_fs { _env.env(), _name };

/* [...] see below */

In the first few lines of our Local_factory, we already see the instantiation of the data file system. You have already seen the forward declaration of Data_file_system in the beginning. I will come back to this once we have completed the Local_factory. Let’s rather continue with the info file system:

struct Vfs::Tap_file_system::Local_factory : File_system_factory

{

/* [...] see above */

struct Info

{

Name const &_name;

Info(Name const & name)

: _name(name)

{ }

void print(Genode::Output &out) const

{

char buf[128] { };

Genode::Xml_generator xml(buf, sizeof(buf), "tap", [&] () {

xml.attribute("name", _name);

});

Genode::print(out, Genode::Cstring(buf));

}

};

Info _info { _name };

Readonly_value_file_system<Info> _info_fs { "info", _info };

/* [...] see below */

For the info file system, we use the Readonly_value_file_system template from os/include/vfs/readonly_value_file_system.h. As the name suggests, it provides a file system with a single read-only file that contains the value of the given type or, more precisely, the string representation of its value. In case of the info file system, we want to fill the file with <tap name="..."/>. Knowing that we are able to convert any object to Genode::String by defining a print(Genode::Output) method, we can use the Info struct as a type for Readonly_value_file_system and customise its string representation at the same time.
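The idea of letting a type define its own string representation via a print method can be mimicked in standard C++. The sketch below uses std::ostream in place of Genode::Output, and Readonly_value_file is a hypothetical toy class, not the real template. As a simplification, the toy renders the value once at construction time.

```cpp
#include <cassert>
#include <sstream>
#include <string>

/* counterpart of the Info struct, printing to a std::ostream */
struct Info
{
	std::string const &name;

	void print(std::ostream &out) const
	{
		out << "<tap name=\"" << name << "\"/>";
	}
};

/*
 * Toy read-only value file: its content is the string that the
 * value's print() method emitted when the file was constructed.
 */
template <typename T>
class Readonly_value_file
{
	private:

		std::string const _content;

		static std::string _render(T const &value)
		{
			std::ostringstream out;
			value.print(out);
			return out.str();
		}

	public:

		explicit Readonly_value_file(T const &value)
		: _content(_render(value)) { }

		std::string const &content() const { return _content; }
};
```

Any type that offers a suitable print() method can be plugged into the template, which is what makes the approach convenient for small info files.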

The remaining fragment of our Local_factory comprises the constructor, an accessor for reading the device name from the <tap> node and the File_system_factory interface.

struct Vfs::Tap_file_system::Local_factory : File_system_factory

{

/* [...] see above */

Local_factory(Vfs::Env &env, Xml_node config)

:

_env(env),

_name(name(config))

{ }

static Name name(Xml_node config)

{

return config.attribute_value("name", Name("tap"));

}

/***********************

** Factory interface **

***********************/

Vfs::File_system *create(Vfs::Env&, Xml_node node) override

{

if (node.has_type("data")) return &_data_fs;

if (node.has_type("info")) return &_info_fs;

return nullptr;

}

};

The create() method is the more interesting part. Here, we return either the data or info file system depending on the XML node type. The function is called by the Dir_file_system on the XML config defined by our Compound_file_system.

Note that mutable parameters (such as the MAC address) are typically provided as additional writeable files along with the info file. For this purpose, you can use the Value_file_system template from os/include/vfs/value_file_system.h together with Genode::Watch_handler to react to file modifications.
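As a rough illustration of such a writeable parameter file, the following stand-alone sketch parses a MAC address from written text and invokes a callback on change. It does not use Value_file_system or Genode::Watch_handler. The names Mac, parse_mac and Toy_mac_file are hypothetical, and the colon-separated text format is an assumption taken from the mac attribute in the usage preview.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <functional>
#include <string>

using Mac = std::array<std::uint8_t, 6>;

/* parse "aa:bb:cc:dd:ee:ff", reject anything with trailing characters */
inline bool parse_mac(std::string const &text, Mac &out)
{
	unsigned bytes[6];
	char     trailing;

	int const matched = std::sscanf(text.c_str(), "%2x:%2x:%2x:%2x:%2x:%2x%c",
	                                &bytes[0], &bytes[1], &bytes[2],
	                                &bytes[3], &bytes[4], &bytes[5], &trailing);
	if (matched != 6)
		return false;

	for (unsigned i = 0; i < 6; i++)
		out[i] = static_cast<std::uint8_t>(bytes[i]);

	return true;
}

/* toy writeable file that notifies an observer about accepted writes */
struct Toy_mac_file
{
	Mac value { };

	std::function<void(Mac const &)> on_change { };

	bool write(std::string const &text)
	{
		Mac mac { };
		if (!parse_mac(text, mac))
			return false;

		value = mac;
		if (on_change)
			on_change(value);
		return true;
	}
};
```

A write of "02:00:00:00:00:01" is accepted and triggers the callback once, whereas malformed input leaves the stored value untouched.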

The last missing piece of our puzzle is the Data_file_system. Luckily, there is no need to take a deep dive into the VFS internals because Vfs::Single_file_system comes to our rescue. It already implements big parts of the Directory_service and the File_io_service interface, and leaves only a handful of methods to be implemented by our Data_file_system. Let’s have a look at the first fragment:

class Vfs::Tap_file_system::Data_file_system : public Vfs::Single_file_system

{

private:

struct Tap_vfs_handle : Single_vfs_handle

{

/* [...] see below */

};

using Registered_handle = Genode::Registered<Tap_vfs_handle>;

using Handle_registry = Genode::Registry<Registered_handle>;

Genode::Env &_env;

Handle_registry _handle_registry { };

public:

Data_file_system(Genode::Env & env,

Name const & name)

:

Vfs::Single_file_system(Node_type::TRANSACTIONAL_FILE, name.string(),

Node_rwx::rw(), Genode::Xml_node("<data/>")),

_env(env)

{ }

static const char *name() { return "data"; }

char const *type() override { return "data"; }

/* [...] see below */

We skip the details of Tap_vfs_handle for the moment. The fragment is not very spectacular, though. We use a Genode::Registry to manage the Tap_vfs_handle objects. The Single_file_system constructor takes a node type, a name, an access mode and an Xml_node as arguments. For the node type, we can choose between CONTINUOUS_FILE and TRANSACTIONAL_FILE. Since a network packet is supposed to be written as a whole and not in arbitrary chunks, we must choose TRANSACTIONAL_FILE here. The file name is determined from the provided XML node by looking up a name attribute. Here, we pass an empty <data/> node, in which case the Single_file_system uses the second argument as a file name instead.

Let’s continue with completing the Directory_service interface:

class Vfs::Tap_file_system::Data_file_system : public Vfs::Single_file_system

{

private:

/* [...] see above */

using Registered_handle = Genode::Registered<Tap_vfs_handle>;

using Handle_registry = Genode::Registry<Registered_handle>;

using Open_result = Directory_service::Open_result;

Genode::Env &_env;

Handle_registry _handle_registry { };

public:

/* [...] see above */

/*********************************

** Directory service interface **

*********************************/

Open_result open(char const *path, unsigned flags,

Vfs_handle **out_handle,

Allocator &alloc) override

{

if (!_single_file(path))

return Open_result::OPEN_ERR_UNACCESSIBLE;

try {

*out_handle = new (alloc)

Registered_handle(_handle_registry, alloc, *this, *this, flags);

return Open_result::OPEN_OK;

}

catch (Genode::Out_of_ram) { return Open_result::OPEN_ERR_OUT_OF_RAM; }

catch (Genode::Out_of_caps) { return Open_result::OPEN_ERR_OUT_OF_CAPS; }

}

};

The only method of the Directory_service interface not implemented by Single_file_system is the open() method. First, we use a helper method _single_file() to check whether the correct path was given. Second, we allocate a new Tap_vfs_handle, which is conveniently put into the _handle_registry by using the Genode::Registered wrapper. The latter also takes care that the handle is removed from the registry on destruction. Note that we should also add a check here that ensures only a single handle is created since the FreeBSD man page says that a TAP device is exclusive-open. I deliberately removed this check for readability, though.
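The omitted exclusive-open check could look roughly like the following stand-alone sketch. It models the registry with standard C++ containers instead of Genode::Registry and Genode::Registered. All names are hypothetical, including the ERR_BUSY result code, which merely stands for whatever error the real open() would return in this situation.

```cpp
#include <cassert>
#include <list>
#include <memory>

struct Handle;

/* toy registry that merely keeps track of the live handles */
struct Registry { std::list<Handle *> handles { }; };

/* a handle that enrols itself on construction and leaves on destruction */
struct Handle
{
	Registry &registry;

	explicit Handle(Registry &r) : registry(r)
	{
		registry.handles.push_back(this);
	}

	~Handle() { registry.handles.remove(this); }

	Handle(Handle const &) = delete;
	Handle &operator = (Handle const &) = delete;
};

enum class Open_result { OK, ERR_BUSY };   /* hypothetical result codes */

/* refuse to open the device while another handle is still registered */
inline Open_result open_exclusive(Registry &registry,
                                  std::unique_ptr<Handle> &out)
{
	if (!registry.handles.empty())
		return Open_result::ERR_BUSY;

	out = std::make_unique<Handle>(registry);
	return Open_result::OK;
}
```

Because the handle removes itself from the registry in its destructor, closing the first handle automatically makes the device available again.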

The read and write operations are part of the File_io_service interface. This interface is already implemented by Single_file_system, which forwards most methods to Single_vfs_handle. Let's thus look at Tap_vfs_handle, which implements the read and write operations and translates them to the uplink or NIC session interface. I omit the details here though, since it would inflate this article a lot. Note that Single_file_system forwards complete_read() to the handle's read() method and always returns true for queue_read().

class Tap_vfs_handle : public Single_file_system::Single_vfs_handle

{

private:

using Read_result = File_io_service::Read_result;

using Write_result = File_io_service::Write_result;

bool _notifying = false;

bool _blocked = false;

void _handle_read_avail()

{

if (!read_ready())

return;

if (_blocked) {

_blocked = false;

io_progress_response();

}

if (_notifying) {

_notifying = false;

read_ready_response();

}

}

public:

Tap_vfs_handle(Allocator &alloc,

Directory_service &ds,

File_io_service &fs,

int flags)

: Single_vfs_handle { ds, fs, alloc, flags }

{ }

bool notify_read_ready() override

{

_notifying = true;

return true;

}

/************************

* Vfs_handle interface *

************************/

bool read_ready() override

{

/* [...] */

}

Read_result read(char *dst, file_size count,

file_size &out_count) override

{

if (!read_ready()) {

_blocked = true;

return Read_result::READ_QUEUED;

}

/* [...] */

return Read_result::READ_OK;

}

Write_result write(char const *src, file_size count,

file_size &out_count) override

{

/* [...] */

return Write_result::WRITE_OK;

}

};

First, we defined a helper method _handle_read_avail() that notifies the C runtime or the VFS server of any progress. There are two types of progress notifications: I/O progress and read ready. The latter we have already come across when mentioning the notify_read_ready() method of the File_io_service. In this implementation, we issue a read-ready response whenever notify_read_ready() was called before on this file handle. Similarly, we keep track of whether a read() operation was unable to complete via the _blocked member variable. By issuing an I/O progress response, the C runtime is notified of the fact that it may retry the read operation. The last ingredient is inserting the proper result types: While READ_OK and WRITE_OK are self-explanatory, there are two common result types for unsuccessful reads/writes. On the one hand, READ_QUEUED indicates that a previously queued read cannot be completed yet. On the other hand, we may return WRITE_ERR_WOULD_BLOCK if, e.g., the submit queue of the NIC/uplink session's transmit channel is full.
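The bookkeeping with the two flags can be tried out in isolation. The following toy model replaces the responses by counters and the session by a simple packet count. It mirrors the logic of _handle_read_avail() but is otherwise a sketch with made-up names (Toy_handle, packet_arrived) rather than the plugin's code.

```cpp
#include <cassert>

/*
 * Toy model of the handle's bookkeeping. The counters stand in for
 * the I/O-progress and read-ready responses, 'pending' for the
 * packets queued at the session.
 */
struct Toy_handle
{
	bool     notifying = false;   /* notify_read_ready() was called */
	bool     blocked   = false;   /* a read could not complete      */
	unsigned pending   = 0;       /* packets waiting to be read     */

	unsigned io_progress_responses = 0;
	unsigned read_ready_responses  = 0;

	bool read_ready() const { return pending > 0; }

	void notify_read_ready() { notifying = true; }

	/* returns true if a packet was consumed, false if the read is queued */
	bool read()
	{
		if (!read_ready()) {
			blocked = true;
			return false;
		}
		pending--;
		return true;
	}

	/* each flag triggers exactly one response and is reset afterwards */
	void handle_read_avail()
	{
		if (!read_ready())
			return;

		if (blocked)   { blocked   = false; io_progress_responses++; }
		if (notifying) { notifying = false; read_ready_responses++;  }
	}

	/* simulated packet-available signal from the session */
	void packet_arrived()
	{
		pending++;
		handle_read_avail();
	}
};
```

A failed read followed by packet_arrived() produces one I/O progress response. Further packets produce no responses until either another read fails or notify_read_ready() is called again.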

Libc modifications

The Tap_vfs_handle completes the (simplified) implementation of our new VFS plugin. However, it still requires a few libc modifications to map the specific ioctl to the pseudo-file system.

The relevant part to modify resides in libports/src/lib/libc/vfs_plugin.cc. First, we modify the Libc::Vfs_plugin::ioctl() to forward the corresponding ioctl requests to a _ioctl_tapctl method that we are going to implement later.

int Libc::Vfs_plugin::ioctl(File_descriptor *fd, unsigned long request, char *argp)

{

switch (request) {

/* [...] */

case TAPSIFINFO:

case TAPGIFINFO:

case TAPSDEBUG:

case TAPGDEBUG:

case TAPGIFNAME:

case SIOCGIFADDR:

case SIOCSIFADDR:

result = _ioctl_tapctl(fd, request, argp);

break;

default:

break;

}

/* [...] */

Note that I added all requests that I know of, irrespective of whether we intend to handle them in _ioctl_tapctl. Let’s have a look at the latter:

Libc::Vfs_plugin::Ioctl_result

Libc::Vfs_plugin::_ioctl_tapctl(File_descriptor *fd, unsigned long request, char *argp)

{

bool handled = false;

int result = 0;

if (request == TAPGIFNAME) { /* return device name */

if (!argp)

return { true, EINVAL };

ifreq *ifr = reinterpret_cast<ifreq*>(argp);

monitor().monitor([&] {

_with_info(*fd, [&] (Xml_node info) {

if (info.type() == "tap") {

String<IFNAMSIZ> name =

info.attribute_value("name", String<IFNAMSIZ> { });

copy_cstring(ifr->ifr_name, name.string(), IFNAMSIZ);

handled = true;

}

});

return Fn::COMPLETE;

});

}

/* [...] */

return { handled, result };

}

The result type Ioctl_result lets us distinguish whether the request was not handled or handled with/without errors. By the example of TAPGIFNAME, we see how the monitor pattern is applied in order to enqueue an I/O job and wait for its completion. The aforementioned I/O progress response, e.g., triggers the execution of pending jobs. The helper _with_info simplifies accessing the info file associated with the file descriptor.

Pitfalls

In this article, I deliberately left out quite a few implementation details to provide a more generic guide and blueprint for writing VFS plugins. The downside of this is that I have not yet been able to convey some lessons learned. Let’s make up for this in this separate section.

The current implementation allows reading and writing the MAC address. When using Net::Mac_address as type for the Value_file_system template, I got a compile-time error, though. Looking at the implementations clarified that the template expects the type to have a constructor taking 0 as an argument. However, this cannot be unambiguously resolved for Net::Mac_address, which defines Mac_address(int) and Mac_address(void*). Instead of modifying the template or Net::Mac_address, I opted for writing a simple Mac_file_system class that overrides the value() method of Value_file_system.

After I managed to compile the vfs_tap library and my test application, and gave both a spin, the application got stuck on the second read() operation. In other words, the packet-available signal from the uplink session was not received. This left me pondering for a moment but, looking at other implementations, I noticed a difference: The Uplink_client_base that I copied and modified to implement the Tap_vfs_handle used a Signal_handler whereas other VFS plugins were using an Io_signal_handler. As I learned from the release notes 17.05, the C runtime defers application-level signals as long as it remains in a blocking function like read() and only receives I/O signals. Hence, using Io_signal_handler in my modified Uplink_client_base resolved this issue.
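Why the application got stuck becomes obvious in a small model of the deferral. The sketch below is a toy dispatcher in plain C++ and not how the C runtime is implemented. Toy_runtime, Signal_level and the method names are hypothetical. It only captures the rule stated above: while blocked, application-level signals are put aside and I/O signals are delivered.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

enum class Signal_level { APP, IO };

struct Toy_runtime
{
	bool blocked_in_libc_call = false;

	std::vector<std::function<void()>> deferred { };

	/* deliver a signal or defer it, depending on its level */
	void dispatch(Signal_level level, std::function<void()> handler)
	{
		if (blocked_in_libc_call && level == Signal_level::APP) {
			deferred.push_back(std::move(handler));
			return;
		}
		handler();
	}

	/* once the blocking call returns, deferred signals are handled */
	void leave_blocking_call()
	{
		blocked_in_libc_call = false;
		for (auto &handler : deferred)
			handler();
		deferred.clear();
	}
};
```

If the packet-available handler is registered at the application level, it is never executed while read() blocks. As read() in turn waits for exactly this handler, neither side makes progress.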

The last lesson I had to learn came from a page fault when closing a file descriptor in my test application. I actually needed to dig into this for a while to find the culprit. For implementing a Tap_vfs_handle that translates read/write operations to an uplink session, I inherited from Uplink_client_base in addition to Single_vfs_handle. Yet, I had not spent any thought on the order of the two base classes. Putting the Single_vfs_handle at second position caused the page fault on destruction. Why is that? There are actually multiple conditions that led to this. First, the Single_file_system implements the close() method by destroying the object pointed to by a Single_vfs_handle*. When Single_vfs_handle is the secondary base class, however, the pointer does not point to the start of the memory block that we allocated when creating our Registered_handle. Instead, it will have an offset. Deep down in the base framework, this corner case was masked and thereby triggered the page fault. I spare you the very details though since, with release 22.02, there is a proper error message in place. The take-away lesson here is that we need to put Single_vfs_handle at first position in the list of base classes.
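The pointer offset is easy to reproduce in standard C++. The sketch below uses two stand-in base classes with hypothetical names instead of the real Uplink_client_base and Single_vfs_handle, and measures where the handle-like base is located within the derived object. The exact layout is up to the compiler, but on the common ABIs the first base class starts at offset zero while the second one does not.

```cpp
#include <cassert>
#include <cstddef>

/* stand-ins for the two base classes, both with state and a vtable */
struct Uplink_like { int uplink_state = 0; virtual ~Uplink_like() = default; };
struct Handle_like { int handle_state = 0; virtual ~Handle_like() = default; };

struct Handle_first  : Handle_like, Uplink_like { };
struct Handle_second : Uplink_like, Handle_like { };

/* distance between the start of the object and its Handle_like part */
template <typename DERIVED>
inline std::ptrdiff_t handle_offset(DERIVED &obj)
{
	auto const start = reinterpret_cast<char const *>(&obj);
	auto const base  = reinterpret_cast<char const *>(
	                       static_cast<Handle_like *>(&obj));
	return base - start;
}
```

With Handle_first, a Handle_like pointer equals the address of the whole object. With Handle_second, it points into the middle of the object, which is the situation that a deallocation keyed on the raw base-class pointer cannot cope with.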

Johannes Schlatow
